Politics, culture, and global warming

John Rennie

The tangled knot of politics, culture, and perceptions of the science behind climate change has been the topic of a series of my recent posts at The Gleaming Retort.




Some epidemiologists are growing concerned about an epidemic similar to mad cow disease that may have been gaining momentum for decades in the wild deer, elk, and caribou populations.


Over at Neurotic Physiology—one of the spiffy new Scientopia blogs, as you surely already know, right, pardner?—Scicurious offers a helpful primer, in text and diagrams, on the basics of neurotransmission. In closing, she remarks:

What boggles Sci’s mind is the tiny scale on which this is happening (the order of microns, a micron is 0.000001m), and the SPEED. This happens FAST. Every movement of your fingers requires THOUSANDS of these signals. Every new fact you learn requires thousands more. Heck, every word you are looking at, just the ACT of LOOKING and visual signals coming into your brain. Millions of signals, all over the brain, per second. And out of each tiny signal, tiny things change, and those tiny changes determine what patterns are encoded and what are not. Those patterns can determine something like what things you see are remembered or not. And so, those millions of tiny signals will determine how you do on your calculus test, whether you swerve your car away in time to miss the stop sign, and whether you eat that piece of cake. If that’s not mind-boggling, what IS?!

Indeed so. The dance of molecule-size entities and their integration into the beginning or end of a neural signal happens so dizzyingly fast it defies the imagination. And yet what happens in between a neuron's receipt of a signal and its own release of one—the propagation of an action potential along the length of a neuron's axon—can be incredibly slow by comparison. Witness a wonderful description by Johns Hopkins neuroscientist David Linden, which science writer JR Minkel calls "The most striking science analogy I've ever heard." I won't steal the thunder of JR's brief post by quoting Linden's comment, but read it and you'll see: that's slow!

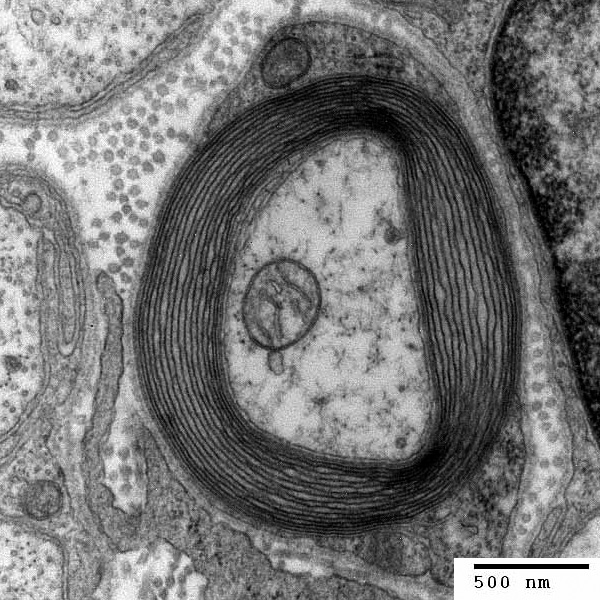

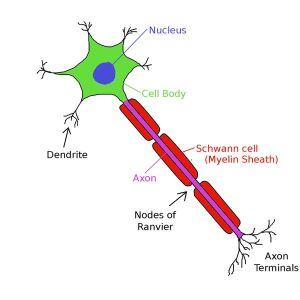

Remember, too, that in Linden's thought experiment, the giant's transatlantic nerves are presumably myelinated (because I'm assuming that even planet-spanning giants still count as mammals). That is, her nerves are segmentally wrapped in fatty myelin tissue, which electrically insulates them and has the advantage of accelerating the propagation speed of action potentials.

Most invertebrates have unmyelinated axons: in them, action potentials move as a smooth, unbroken wave of electrical activity along the axon's length. In the myelinated axons of mammals and other vertebrates, which apparently have more need of fast, energy-efficient neurons, the action potential jumps along between the nodes separating myelinated stretches of membrane: the depolarization of membrane at one node triggers depolarization at the next node, and so on. Because of this jumpy mode of transmission (or saltatory conduction, to use the technical term), the action potentials race along in myelinated neurons at speeds commonly between 10 and 120 meters per second, whereas unmyelinated neurons can often only manage between 5 and 25 meters per second.
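To put those speed ranges in concrete terms, here is a minimal Python sketch that converts them into travel times. The speed ranges are the ones quoted above; the one-meter axon length and all the function names are my own illustrative assumptions, not figures from Linden or Sci.

```python
# Conduction-speed ranges quoted in the post, in meters per second.
SPEED_RANGES_M_PER_S = {
    "myelinated": (10.0, 120.0),
    "unmyelinated": (5.0, 25.0),
}


def travel_time_ms(distance_m: float, speed_m_per_s: float) -> float:
    """Return the time, in milliseconds, for a signal to cover distance_m."""
    if speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    return distance_m / speed_m_per_s * 1000.0


def travel_time_range_ms(distance_m: float, axon_type: str) -> tuple[float, float]:
    """Return (fastest, slowest) travel times in ms for the given axon type."""
    slow_speed, fast_speed = SPEED_RANGES_M_PER_S[axon_type]
    return (
        travel_time_ms(distance_m, fast_speed),
        travel_time_ms(distance_m, slow_speed),
    )


if __name__ == "__main__":
    # Assumption: a 1-meter axon, chosen only as a round illustrative length.
    AXON_LENGTH_M = 1.0
    for axon_type in SPEED_RANGES_M_PER_S:
        fastest, slowest = travel_time_range_ms(AXON_LENGTH_M, axon_type)
        print(f"{axon_type}: {fastest:.1f} to {slowest:.1f} ms over {AXON_LENGTH_M} m")
```

Swapping in a longer distance shows how quickly the delay grows, since travel time scales linearly with axon length at a fixed speed.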

Roughly speaking, myelination seems to increase the propagation speed of action potentials about tenfold. The speed of an action potential also increases with the diameter of an axon, however, which is why neurons that need to conduct signals very rapidly tend to be fatter. (Myelinless invertebrates can therefore compensate for the inefficiency of their neurons by making them thicker.) I suppose that someone who wants to be a killjoy for Linden's great giant analogy could question whether his calculation of the neural transmission speeds took into account how much wider the giant's neurons should be, too: presumably, they should be orders of magnitude faster than those of normal-size humans.


So I'll throw out these two questions for any enterprising readers who would like to calculate the answers:

Update (added 8/24): Noah Gray, senior editor at the Nature Publishing Group, notes that Sci accidentally overstated the size of the synaptic cleft: it's actually on the order of 20 nanometers on average, not microns. Point taken, and thanks for the correction! Sci may well have fixed that by now in her original, but I append it here for anyone running into the statement here first. Further amendment to my update: Actually, on reflection, in her reference to microns, Sci may have been encompassing events beyond the synaptic cleft itself and extending into the presynaptic and postsynaptic neurons (for example, the movement of the vesicles of neurotransmitter). So rather than my referring to this as a mistake, I'll thank Noah for the point about the size of the cleft and leave it to Sci to clarify or not, as she wishes.

Ray Kurzweil, the justly lauded inventor and machine intelligence pioneer, has been predicting that humans will eventually upload their minds into computers for so long that I think his original audience wondered whether a computer was a type of fancy abacus. It simply isn’t news for him to say it anymore, and since nothing substantive has happened recently to make that goal any more imminent, there’s just no good excuse for Wired to still be running articles like this:

Reverse-engineering the human brain so we can simulate it using computers may be just two decades away, says Ray Kurzweil, artificial intelligence expert and author of the best-selling book The Singularity is Near.

It would be the first step toward creating machines that are more powerful than the human brain. These supercomputers could be networked into a cloud computing architecture to amplify their processing capabilities. Meanwhile, algorithms that power them could get more intelligent. Together these could create the ultimate machine that can help us handle the challenges of the future, says Kurzweil.

This article doesn’t explicitly refer to Kurzweil’s inclusion of uploading human consciousness into computers as part of his personal plan for achieving immortality. That’s good, because the idea has already been repeatedly and bloodily drubbed—by writer John Pavlus and by Glenn Zorpette, executive editor of IEEE Spectrum, to take just two recent examples. (Here are audio and a transcription of a conversation between Zorpette, writer John Horgan and Scientific American’s Steve Mirsky that further kicks the dog. And here's a link to Spectrum's terrific 2008 special report that puts the idea of the Singularity in perspective.)

Instead, the Wired piece restricts itself to the technological challenge of building a computer capable of simulating a thinking, human brain. As usual, Kurzweil rationalizes this accomplishment by 2030 by pointing to exponential advances in technology, as famously embodied by Moore’s Law, and this bit of biological reductionism:

A supercomputer capable of running a software simulation of the human brain doesn’t exist yet. Researchers would require a machine with a computational capacity of at least 36.8 petaflops and a memory capacity of 3.2 petabytes ….

<…>

Sejnowski says he agrees with Kurzweil’s assessment that about a million lines of code may be enough to simulate the human brain.

Here’s how that math works, Kurzweil explains: The design of the brain is in the genome. The human genome has three billion base pairs or six billion bits, which is about 800 million bytes before compression, he says. Eliminating redundancies and applying loss-less compression, that information can be compressed into about 50 million bytes, according to Kurzweil.

About half of that is the brain, which comes down to 25 million bytes, or a million lines of code.
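For anyone who wants to follow the arithmetic in the passage just quoted, here is a minimal Python sketch of it. The input figures are the ones attributed to Kurzweil; the two bits per base pair follows from there being four possible bases, and the function and variable names are my own. It checks only the sums, not the premise behind them.

```python
def genome_bytes(base_pairs: int, bits_per_base_pair: int = 2) -> int:
    """Raw size of a genome in bytes, at 2 bits per base pair (four bases)."""
    return base_pairs * bits_per_base_pair // 8


def bytes_per_line(total_bytes: float, lines_of_code: int) -> float:
    """Average bytes per line implied by a byte count and a line count."""
    return total_bytes / lines_of_code


if __name__ == "__main__":
    # Figures as quoted in the Wired article.
    BASE_PAIRS = 3_000_000_000
    COMPRESSED_BYTES = 50_000_000   # Kurzweil's estimate after compression
    BRAIN_FRACTION = 0.5            # "about half of that is the brain"
    LINES_OF_CODE = 1_000_000

    raw = genome_bytes(BASE_PAIRS)
    brain = COMPRESSED_BYTES * BRAIN_FRACTION

    print(f"raw genome: {raw:,} bytes")
    print(f"implied compression ratio: {raw / COMPRESSED_BYTES:.0f} to 1")
    print(f"brain share: {brain:,.0f} bytes")
    print(f"implied bytes per line: {bytes_per_line(brain, LINES_OF_CODE):.0f}")
```

Six billion bits works out to 750 million bytes, which the article rounds up to "about 800 million," and the million-lines figure implies an average of 25 bytes per line of code.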

First, quantitative estimates of the information processing and storage capacities of the brain are all suspect for the simple reason that no one yet understands how nervous systems work. Science has detailed information about neural signaling, and technologies such as fMRI and optogenetics are yielding better information all the time about how the brain’s circuitry produces thoughts, memories and behaviors, but these still fall far short of telling us how brains do anything of interest. Models that treat neurons like transistors and action potentials like digital signals may be too deficient for the job.

But let’s stipulate that some numerical estimate is correct, because mental activities do have to come from physical processes somehow, and those can be quantified and modeled. What about Kurzweil’s premise that “The design of the brain is in the genome”?

In short, no. I was gearing up to explain why that statement is wrong, but then discovered that PZ Myers had done a far better job of refuting it than I could. Read it all for the full force of the rebuttal, but here’s a taste that captures the essence of what’s utterly off kilter:

Its design is not encoded in the genome: what's in the genome is a collection of molecular tools wrapped up in bits of conditional logic, the regulatory part of the genome, that makes cells responsive to interactions with a complex environment. The brain unfolds during development, by means of essential cell:cell interactions, of which we understand only a tiny fraction. The end result is a brain that is much, much more than simply the sum of the nucleotides that encode a few thousand proteins. [Kurzweil] has to simulate all of development from his codebase in order to generate a brain simulator, and he isn't even aware of the magnitude of that problem.

We cannot derive the brain from the protein sequences underlying it; the sequences are insufficient, as well, because the nature of their expression is dependent on the environment and the history of a few hundred billion cells, each plugging along interdependently. We haven't even solved the sequence-to-protein-folding problem, which is an essential first step to executing Kurzweil's clueless algorithm. And we have absolutely no way to calculate in principle all the possible interactions and functions of a single protein with the tens of thousands of other proteins in the cell!

Lay Kurzweil’s error alongside the others at the feet of biology’s most flawed metaphor: that DNA is the blueprint for life.

What this episode ought to call into question for reporters and editors, and yet I doubt that it will, is how reliable or credible Kurzweil’s technological predictions are. Others have evaluated his track record in the past, but I’ll have more to say on it later. For now, in closing I’ll simply borrow this final barb from John Pavlus’s wonderfully named Guns and Elmo site (he’s also responsible for the Rapture of the Nerds image I used as an opener).

How to Make a Singularity

Step 1: “I wonder if brains are just like computers?”

Step 2: Add peta-thingies/giga-whatzits; say “Moore’s Law!” a lot at conferences

Step 3: ??????

Step 4: SINGULARITY!!!11!one

Added later (5:10 pm): I should note that Kurzweil acknowledges his numeric extrapolations of engineering capabilities omit that even “a perfect simulation of the human brain or cortex won’t do anything unless it is infused with knowledge and trained.” Translation: we’ll have the hardware, but we won’t necessarily have the software. And I guess his statement that “Our work on the brain and understanding the mind is at the cutting edge of the singularity” is his way of saying that creating the right software will be hard.

No doubt his admission is supposed to make me as a reader feel that Kurzweil is only being forthcoming and honest, but in fact it might be the most infuriating part of the article. Computers without the appropriate software might as well be snow globes. As a technologist, Kurzweil knows that better than most of us. So he should also know that neuroscientists’ still primitive understanding of how the brain solves problems, stores and recalls memories, generates consciousness or performs any of the other feats that make it interesting largely moots his how-fast-will-supercomputers-be argument. And yet he makes it anyway.